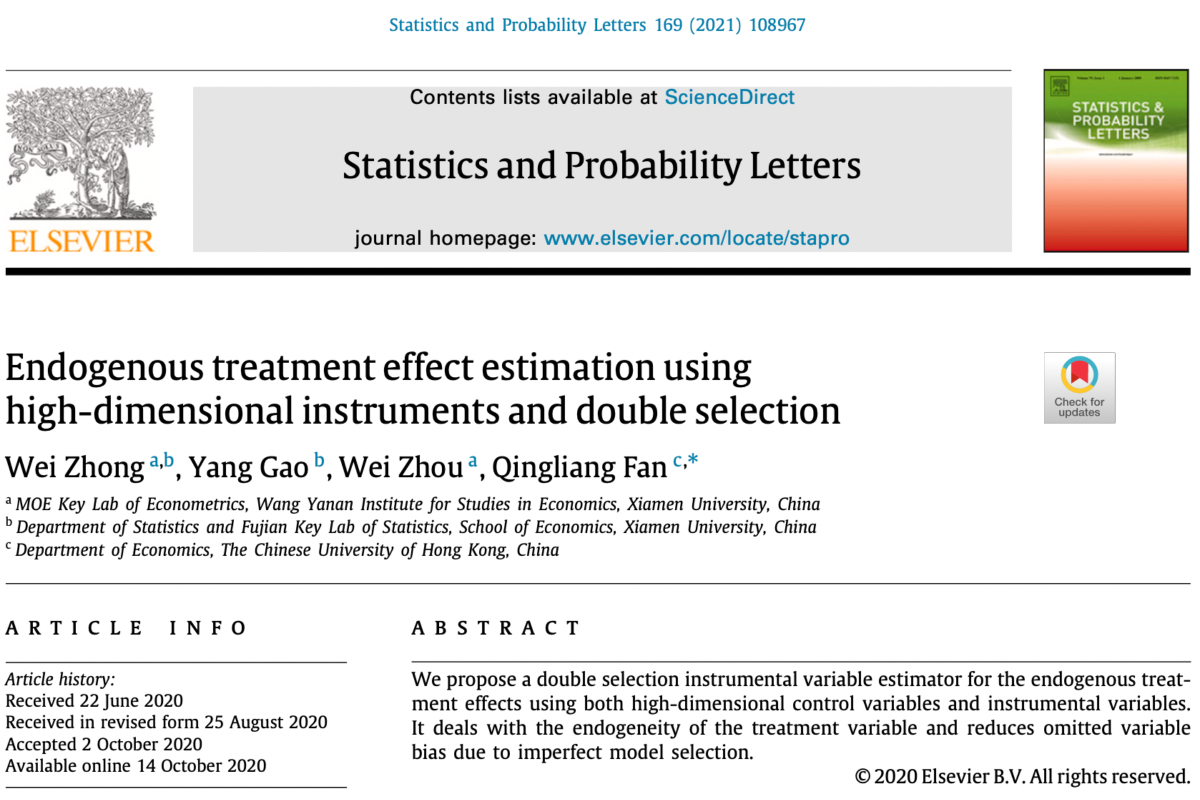

Zhong, W., Zhou, W., Fan, Q., & Gao, Y. (2022). Dummy endogenous treatment effect estimation using high‐dimensional instrumental variables. Canadian Journal of Statistics, 50, 795-819.

We develop a two-stage approach to estimate the treatment effects of dummy endogenous variables using high-dimensional instrumental variables (IVs). In the first stage, instead of using a conventional linear reduced-form regression to approximate the optimal instrument, we propose a penalized logistic reduced-form model to accommodate both the binary nature of the endogenous treatment variable and the high dimensionality of the IVs. In the second stage, we replace the original treatment variable with its estimated propensity score and run a least-squares regression to obtain a penalized logistic regression instrumental variables estimator (LIVE). We show theoretically that the proposed LIVE is root-n consistent for the true treatment effect and asymptotically normal. Monte Carlo simulations demonstrate that LIVE is more efficient than existing IV estimators for endogenous treatment effects. In applications, we use LIVE to investigate whether hosting the Olympic Games facilitates the host nation’s economic growth and whether home visits from teachers enhance students’ academic performance. R functions implementing the proposed algorithms are available in the R package naivereg.
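The two stages described in the abstract can be illustrated with a minimal sketch on simulated data. This is not the naivereg implementation. It assumes a plain L1 (lasso) penalty fitted by proximal gradient descent, a model with no exogenous covariates, and a simulated design in which the logistic first stage is correctly specified. The abstract only says "penalized", so the penalty choice, the tuning value `lam`, and all function and variable names are hypothetical.

```python
import numpy as np

def sigmoid(t):
    # Clip the argument to avoid overflow in exp for extreme linear predictors.
    return 1.0 / (1.0 + np.exp(-np.clip(t, -30.0, 30.0)))

def fit_l1_logistic(Z, d, lam, n_iter=1000):
    """First stage (assumed form): L1-penalized logistic regression of d on Z.

    Fitted by proximal gradient descent; the intercept is left unpenalized.
    Returns the coefficient vector and the fitted propensity scores.
    """
    n = Z.shape[0]
    X = np.column_stack([np.ones(n), Z])
    # Step size 1/L, where L = ||X||_2^2 / (4n) bounds the curvature of the mean log-loss.
    step = 4.0 * n / np.linalg.norm(X, 2) ** 2
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        grad = X.T @ (sigmoid(X @ beta) - d) / n
        beta = beta - step * grad
        # Soft-threshold every coefficient except the intercept.
        beta[1:] = np.sign(beta[1:]) * np.maximum(np.abs(beta[1:]) - step * lam, 0.0)
    return beta, sigmoid(X @ beta)

def ols_slope(x, y):
    """Least-squares regression of y on an intercept and x; returns the slope."""
    X = np.column_stack([np.ones(len(x)), x])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[1]

# Simulated data (all settings are illustrative assumptions).
rng = np.random.default_rng(0)
n, p = 4000, 50
Z = rng.standard_normal((n, p))           # many candidate instruments
gamma = np.zeros(p)
gamma[:3] = 1.0                           # only the first three are relevant
v = rng.logistic(size=n)                  # logistic error, so P(d=1|Z) = sigmoid(Z @ gamma)
u = 0.5 * v + rng.standard_normal(n)      # outcome error correlated with v: d is endogenous
d = (Z @ gamma + v > 0).astype(float)     # binary (dummy) endogenous treatment
true_effect = 2.0
y = 1.0 + true_effect * d + u

# Stage 1: penalized logistic reduced form gives estimated propensity scores.
beta_hat, p_hat = fit_l1_logistic(Z, d, lam=0.01)

# Stage 2: replace d with its estimated propensity score and run least squares.
beta_live = ols_slope(p_hat, y)

# Naive benchmark: least squares of y on the endogenous treatment itself.
beta_ols = ols_slope(d, y)

print("selected instruments:", int(np.sum(beta_hat[1:] != 0)))
print("naive OLS estimate:  ", beta_ols)
print("two-stage estimate:  ", beta_live)
```

In this design the naive regression absorbs the correlation between the treatment and the outcome error, while the second-stage regression on the fitted propensity score uses only the variation in treatment that the instruments explain. Because the L1 penalty shrinks the first-stage coefficients, a careful implementation would choose `lam` in a data-driven way rather than fixing it as done here.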